The AI Sandbox: Scaling Intelligent Processes in Banking

- alexwelsh6

- 2 days ago

There is little need to convince leaders in regulated industries that Generative AI presents a significant opportunity to optimise operations, reduce costs, and unlock new capabilities.

In financial services in particular, AI is already influencing decisions across customer engagement, risk assessment, fraud detection, and internal operations.

Across product, design, and technology teams, the conversation has moved quickly from whether AI should be adopted to how it can be deployed responsibly and at scale, without compromising regulatory compliance, customer trust, or decision integrity.

What remains less clear is how organisations can be confident that AI systems are making the right decisions: decisions that are explainable to regulators, auditable under scrutiny, and aligned with both customer outcomes and evolving regulatory expectations.

In highly regulated environments, this confidence is not optional; it is foundational to sustainable AI adoption.

What holds organisations back

Large organisations operating under intense competitive and regulatory pressure are uniquely positioned to benefit from AI.

Yet many are attempting to apply it using traditional product models and agile delivery workflows that were never designed for probabilistic, learning systems that continuously evolve.

This mismatch creates friction. Promising pilots struggle to scale beyond controlled environments, risk and compliance teams become unintended bottlenecks, and confidence in AI-driven decisions erodes before meaningful value is realised.

Underpinning this is a very real concern: the fear that AI adoption could introduce regulatory risk or compromise customer trust and market integrity. In regulated industries, this uncertainty often leads to cautious experimentation - or stalled progress - rather than sustainable, enterprise-wide deployment.

AI Sandbox

What is an AI sandbox?

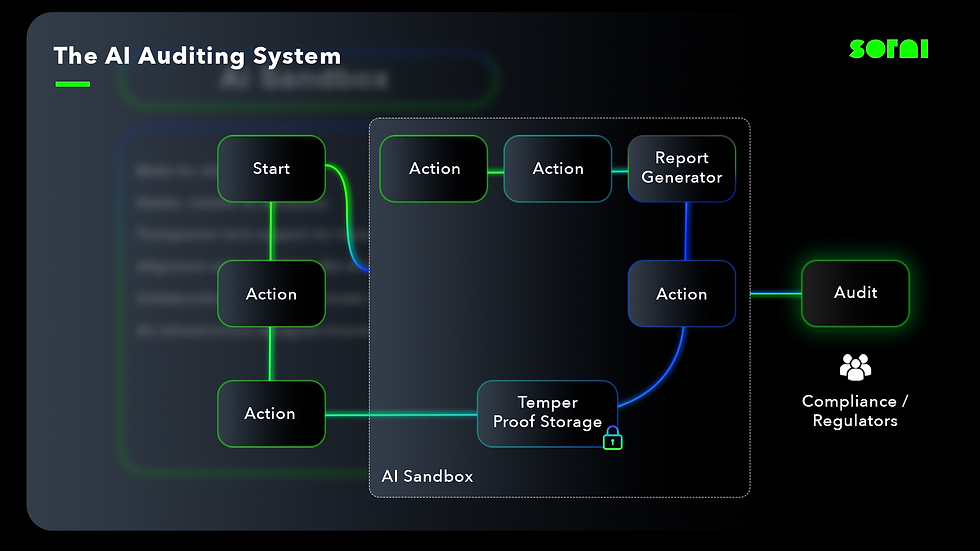

An AI sandbox is a controlled, observable environment where AI systems can be designed, tested, and evaluated before being exposed to customers or critical business processes. It enables teams to safely explore AI-driven decisions without compromising trust, compliance, or brand integrity.

At Sorai, we develop AI Sandboxes that are strictly grounded in Explainable AI.

Explainable AI is not something to be “added later.” It must be intentionally designed into the sandbox itself, from decision logic and model outputs to dashboards, alerts, and escalation paths. This allows product, risk, legal, and technology teams to share a common view of how and why AI behaves as it does.

What are the regulators saying?

Regulators themselves are signalling that responsible experimentation with AI is now integral to innovation, but it must be grounded in customer and market integrity.

In our discussions with the Financial Conduct Authority (FCA), the regulator has been explicit that AI should be governed within existing accountability frameworks, such as the Consumer Duty and the Senior Managers & Certification Regime, rather than waiting for new, prescriptive rules.

The FCA is actively supporting firms through initiatives like its AI Lab and Supercharged Sandbox, which provide controlled environments for testing and refining AI tools with access to synthetic data, enhanced computing resources, and direct regulatory engagement.

Taken together, this points to a structural shift in how AI capability is developed and governed. Rather than monolithic, end-to-end systems, regulators increasingly expect firms to operate within modular ecosystems that combine specialised partners, clearly defined responsibilities, and strong oversight at each point of the value chain. As one senior regulatory leader put it:

‘We’re going into an era of fragmentation. It can end up being much easier to control because you partner with people who are extremely good at one thing, and the sum of the parts will be better. This is the ecosystem model that I believe we’re heading for.’

– AI Lead, Financial Regulation

In the same spirit, Sorai’s sandbox programme is designed to help firms understand risks relating to bias, explainability, and operational resilience before wider deployment, and to reinforce that boards and senior leaders remain ultimately accountable for AI outcomes in products, services, and regulatory processes.

To see how this works in practice, request a demo of Sorai’s AI sandbox by emailing niharika@soraiglobal.com.

Getting the foundations right before you build an AI Sandbox

We believe there are three areas to consider to ensure that we are building AI solutions in a manner that upholds both market and customer integrity.

Human experience design: Most AI projects fall short because they treat the employee and user experience as a bolt-on while the focus remains largely technical. Simply put, designing AI processes means designing for trust and integrity, which are essentially human experiences. We’ve learnt that the first and most important step is to understand how teams work and how users engage with processes and services, and then to determine where AI, human intervention, and trust need to be designed in. This understanding becomes the blueprint for how the metrics, process flows and governance models are developed.

Governance models: Governance sets the foundation for how AI and people work together to deliver the service and make decisions. Governance consists broadly of two key parts:

Ethics, trust and transparency - Designing and governing systems so decisions are fair, explainable, accountable, and trusted by people and regulators.

Roles and responsibilities - Clearly defining ownership, decision rights, and accountability across teams for how systems are designed, used, monitored, and improved.

Human oversight is critical for trust and accountability. However, without the right governance, it can introduce high cost, operational drag, and decision latency. Well-designed AI sandboxes deliver their greatest value by providing confidence in AI decision-making, which lets the business concentrate human oversight and review where they are needed.
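To make the idea of targeted human oversight concrete, here is a minimal sketch of a routing rule a team might trial in a sandbox. It is purely illustrative and not a description of Sorai’s implementation: the thresholds, the `Decision` fields, and the `route_decision` function are all hypothetical, and real values would need to be agreed with risk and compliance teams.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration only; real values would be
# agreed with risk and compliance teams and tuned in the sandbox.
AUTO_APPROVE_CONFIDENCE = 0.90
ESCALATE_CONFIDENCE = 0.60


@dataclass
class Decision:
    case_id: str
    outcome: str        # the model's proposed outcome, e.g. "approve"
    confidence: float   # model-reported confidence in [0, 1]
    high_risk: bool     # flagged by business rules, e.g. a large exposure


def route_decision(decision: Decision) -> str:
    """Return who handles the decision: 'auto', 'human_review' or 'escalate'."""
    if not 0.0 <= decision.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    # Very uncertain decisions go straight to the escalation path.
    if decision.confidence < ESCALATE_CONFIDENCE:
        return "escalate"
    # High-risk or moderately uncertain decisions get a human reviewer.
    if decision.high_risk or decision.confidence < AUTO_APPROVE_CONFIDENCE:
        return "human_review"
    # Everything else proceeds without manual review.
    return "auto"
```

Running sandbox cases through a rule like this shows how much review volume a given threshold creates, which is one way to balance oversight against cost and decision latency before anything reaches customers.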

Management of AI processes: It is fundamental that all AI processes are designed with human oversight and interventions. This means different things to different organisations depending on their size, maturity, and team setup. When designing processes, we focus not just on the experience, needs and pain points but also on where human interaction and interventions will be needed. Operational visibility across performance, bias, drift, and decision quality, not just technical metrics, becomes key. These are then designed with a focus on roles, responsibilities, incentives and role modelling – a framework that is proven to lead to a sustainable operating model.
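As one illustration of what visibility into drift could look like, the sketch below computes a Population Stability Index (PSI) between a baseline set of model scores and a more recent set. PSI is one commonly used drift measure; the article does not name a specific metric, so treat the choice, the function names, and the bin count as assumptions. Scores are assumed to lie between 0 and 1.

```python
import math
from typing import Sequence


def bin_proportions(scores: Sequence[float], n_bins: int = 10) -> list[float]:
    """Share of scores falling in each equal-width bin over [0, 1]."""
    if not scores:
        raise ValueError("scores must not be empty")
    counts = [0] * n_bins
    for s in scores:
        if not 0.0 <= s <= 1.0:
            raise ValueError("scores must lie in [0, 1]")
        idx = min(int(s * n_bins), n_bins - 1)  # a score of 1.0 goes in the last bin
        counts[idx] += 1
    return [c / len(scores) for c in counts]


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    n_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    expected = bin_proportions(baseline, n_bins)
    actual = bin_proportions(current, n_bins)
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) when a bin is empty
        psi += (a - e) * math.log(a / e)
    return psi
```

A value of zero means the two score distributions match bin for bin, and larger values indicate a bigger shift. The level at which a dashboard alert or escalation is triggered is a governance decision rather than something the code can settle.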

Businesses should consider training and upskilling their teams continuously, not just on the new processes, but also so that people can work effectively with AI-enabled processes, evolving workflows and the governance model.

How to get started

It is easy to feel overwhelmed when undertaking AI transformations, which is why the AI sandbox is a great way to start.

It gives you a secure space in which to try out your processes and confirm that your AI models are making decisions in a compliant manner before you roll them out.

Four practical ways to get started are:

Link strategy to implementation and capability: Align AI ambition with delivery realities and organisational readiness.

Start with a specific, validated use case: Prioritise use cases with clear decision boundaries, measurable outcomes, and known risk profiles. Consider testing the more complex, high-risk use cases in the sandbox first.

Change the delivery rhythm: Prototype the process, not just the model. Test governance flows, dashboards, decision thresholds, and escalation paths early. The metrics that come out of these tests become the alignment mechanism for leadership, risk, and delivery teams.

Design the employee and customer experience: Be intentional about what trust looks like in practice - for both internal teams and end customers.

Building and scaling AI within regulated environments demands delivery models that go beyond traditional agile or transformation playbooks. Generative AI requires new delivery processes with explicit checkpoints and rapid prototyping – it is key that your team and your partners make, iterate and test across people, processes and AI continuously.

Watch Sorai's AI Sandbox in Action

Sorai is now conducting in-person and virtual demos of our AI Sandbox, showing how organisations can design, test, and scale AI decisions with confidence.

In these demos, we:

Demonstrate our proven approach to developing the sandbox

Show you how our sandbox works for some of the key use cases, including KYC, fraud and property valuation

Share our thinking and experience in financial services and AI

Create space to answer any of your questions openly and honestly

This is a working session designed to help you understand what responsible, explainable AI looks like when it is put to work.

If you are thinking seriously about introducing AI into your organisation, or pressure-testing what you already have, we would welcome the conversation.

Reach out to Niharika, Sorai's Global Digital Ventures and Product Strategy Lead, to arrange a demo: Niharika@soraiglobal.com.
